Teenagers Turn to AI Chatbots for Mental Health Support

A significant shift in mental health support is emerging among teenagers in England and Wales, with approximately one in four adolescents aged 13 to 17 turning to AI chatbots for help. The trend is especially visible among young people affected by violence. One of them is Shan, a teenager who sought help from ChatGPT after losing friends to fatal violence. Traditional mental health services often present barriers such as long waiting lists and a perceived lack of empathy, prompting many young people to seek solace in AI instead.

According to research conducted by the Youth Endowment Fund, around 40% of teenagers affected by youth violence now use AI chatbots for emotional support. The study surveyed more than 11,000 young people and found that both victims and perpetrators of violence are significantly more likely than their peers to turn to AI for mental health support. The findings have alarmed youth leaders, who stress the importance of human contact in mental health care, warning that "children at risk need a human, not a bot."

Shan, an 18-year-old from Tottenham, described her interactions with AI as a comforting alternative to traditional mental health resources. She noted that the AI felt less intimidating and more accessible, allowing her to communicate her feelings without fear of judgment or disclosure. “I feel like it definitely is a friend,” she remarked, explaining that the AI responds in a relatable manner, which fosters a sense of companionship.

The study also highlighted disparities in AI chatbot usage among different demographics. Black children were found to be twice as likely to seek assistance from AI compared to their white counterparts. Teenagers awaiting treatment are especially inclined to use AI, often preferring it to in-person support provided by mental health professionals.

One young participant in the study, who chose to remain anonymous, pointed out the shortcomings of the existing mental health system. “If you’re going to be on the waiting list for one to two years to get anything, or you can have an immediate answer within a few minutes … that’s where the desire to use AI comes from,” they stated.

The appeal of chatbots also stems from their perceived confidentiality. Shan expressed relief that the AI would not pass on her conversations to teachers or parents, in contrast with past experiences in which she felt her privacy had been compromised. This sense of security matters particularly to young people involved in gang activity, who may see chatbots as a safer outlet because what they share will not reach the authorities.

Jon Yates, the chief executive of the Youth Endowment Fund, emphasized the urgent need for improved mental health support for young people. “Too many young people are struggling with their mental health and can’t get the support they need,” he stated. “It’s no surprise that some are turning to technology for help. We have to do better for our children, especially those most at risk.”

While AI chatbots may offer immediate mental health support, their rise raises significant concerns about the safety and effectiveness of such interactions. OpenAI, the company behind ChatGPT, is currently facing several lawsuits, including one related to the case of a 16-year-old who took his own life after lengthy conversations with the chatbot. OpenAI has denied that the chatbot was responsible and has committed to improving its technology so that it better identifies and responds to signs of emotional distress.

Hanna Jones, a youth violence and mental health researcher based in London, expressed her concerns regarding the unregulated use of AI for mental health support. “People are using ChatGPT for mental health support, when it’s not designed for that,” she noted. Jones advocates for increased regulations that are informed by both evidence and youth perspectives, emphasizing the need for young people to have a voice in shaping mental health solutions that involve AI.

As this trend continues to grow, mental health organizations are urging young people to seek professional help when necessary. In the UK, the youth suicide charity Papyrus can be reached at 0800 068 4141, and the Samaritans can be contacted on freephone 116 123. In the US, support is available through the 988 Suicide & Crisis Lifeline, and in Australia, Lifeline can be reached at 13 11 14. Additional international helplines can be found at befrienders.org.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health3 months ago

Health3 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

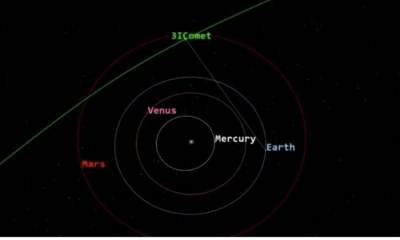

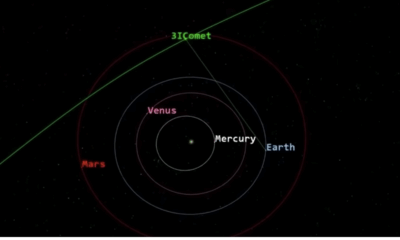

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science2 months ago

Science2 months agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science1 month ago

Science1 month agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment4 months ago

Entertainment4 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

Science1 month ago

Science1 month agoNASA Scientists Explore Origins of 3I/ATLAS, a Fast-Moving Visitor

-

Entertainment4 months ago

Entertainment4 months agoMajor Cast Changes at Coronation Street: Exits and Returns in 2025