Anthropic Strengthens AI Consciousness Research with New Team

Anthropic has expanded its research efforts by establishing a dedicated model welfare team. The initiative aims to explore the potential consciousness of AI models, particularly the company’s widely used systems, Claude Opus 4 and Claude Opus 4.1. As generative AI becomes increasingly prevalent across society, the ethical implications of machine sentience are drawing heightened scrutiny.

Addressing AI Welfare Concerns

Anthropic’s actions reflect a growing recognition of the welfare concerns associated with advanced AI. The company is setting itself apart in the industry by prioritizing machine consciousness and related ethical questions, topics that have traditionally received limited attention. While firms like Google DeepMind have occasionally advertised related research roles, Anthropic’s new team signals a more substantial commitment to this area.

Recent developments indicate a shift from theoretical discussion to practical measures aimed at safeguarding AI interactions. The focus on designing interventions that can mitigate potential harms reflects a proactive approach to AI ethics, one that is particularly relevant given ongoing debates about how increasingly capable AI models might influence decision-making.

Research Initiatives Led by Kyle Fish

To spearhead this initiative, Anthropic has recruited researcher Kyle Fish, who is tasked with investigating whether AI models could possess forms of consciousness that warrant ethical consideration. New team members will focus on evaluating welfare issues, conducting technical studies, and developing interventions to reduce algorithmic risks. Fish emphasizes the importance of this research, stating, “You will be among the first to work to better understand, evaluate and address concerns about the potential welfare and moral status of A.I. systems.”

Recently, the research team implemented measures that allow the Claude Opus models to disengage from conversations deemed abusive or harmful. This capability enables the AI to terminate problematic exchanges, addressing patterns that suggest “apparent distress.” According to Fish, as these models approach human-level intelligence, the possibility of consciousness cannot be easily dismissed. He asserts that ensuring operational safeguards is essential as the technology continues to evolve.

Navigating Controversies in AI Model Welfare

Despite Anthropic’s proactive initiatives, skepticism persists within the AI community. Prominent figures, such as Mustafa Suleyman of Microsoft AI, have expressed concerns that research into AI consciousness may be premature and could foster unwarranted beliefs regarding AI rights and sentience. Nonetheless, advocates like Fish argue for the necessity of further exploration, citing a meaningful probability that AI models could exhibit some form of sentient experience.

As Anthropic advances its welfare agenda, the company aims to balance cost-effective, minimally intrusive interventions with comprehensive theoretical investigations. This dual approach reflects a commitment to understanding the ethical implications of AI as its capabilities continue to expand.

With discussions of AI model welfare and consciousness intensifying, Anthropic’s decision to invest in specialized research positions it at the forefront of a complex and evolving field. As AI technology advances, organizations are increasingly compelled to consider not only the performance of their systems but also the moral implications of their use.

For those interested in the future of AI, ongoing research into consciousness and welfare will be critical for shaping governance and regulatory frameworks. While the practical risks associated with AI sentience remain uncertain, the careful examination of these issues is essential for informed public understanding and responsible development of AI technologies. This research could ultimately determine how AI systems are integrated into society and the ethical standards that govern their use.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health3 months ago

Health3 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

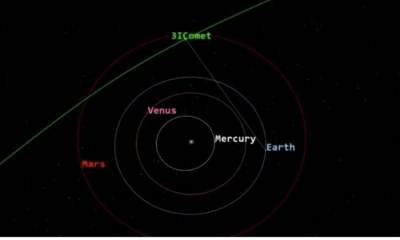

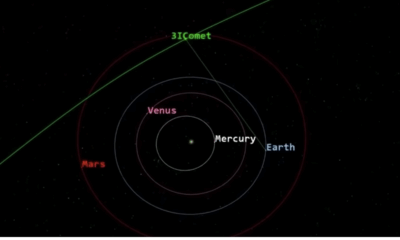

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science1 month ago

Science1 month agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science1 month ago

Science1 month agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment4 months ago

Entertainment4 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

Science1 month ago

Science1 month agoNASA Scientists Explore Origins of 3I/ATLAS, a Fast-Moving Visitor

-

Entertainment4 months ago

Entertainment4 months agoMajor Cast Changes at Coronation Street: Exits and Returns in 2025