UK Families Urged to Create Safe Words to Combat AI Deepfake Scams

Families across the United Kingdom are being advised to agree on safe words or passwords in response to a surge in artificial intelligence (AI) deepfake scams. These scams, which use highly realistic video and audio, increasingly target everyday individuals as well as celebrities. As AI technology evolves, distinguishing genuine content from manipulated content has become harder, raising significant concerns about public safety.

The rise of deepfake scams has prompted calls for proactive measures. Individuals are urged to agree on unique safe words with their relatives to help protect against impersonation attempts. According to a recent report in the Mirror, hackers are exploiting advanced AI capabilities to clone voices and images, then using the clones in fraudulent video calls to demand money from unsuspecting victims.

AI-generated content has gained traction on platforms such as TikTok, where users frequently encounter videos featuring notable figures like Jake Paul, Tupac, and Robin Williams. These videos can be so convincing that viewers often need time to work out whether they are real. One TikTok user, known as Chelsea (@chelseasexplainsitall), demonstrated how easy it is to create fake videos using an app called Sora. This text-to-video platform, which has over one million downloads, can generate realistic videos in under a minute.

In her video, Chelsea emphasized the importance of safe words among families. “You and your family need a safe word,” she said, noting that while deepfake technology can mimic faces and voices, it cannot know a secret phrase shared only within a family. She warned, “These deepfakes are crazy; the scams are going to be insane. It’s so realistic, it’s actually frightening.”

Concerns about deepfake technology extend beyond impersonation scams. Experts have also raised alarms about the use of deceased public figures in AI-generated content. This raises ethical questions, because these individuals cannot consent to the use of their likeness, and it also risks spreading misinformation. A representative from OpenAI, the company behind Sora, told NBC News that while there are strong free speech interests in depicting historical figures, public figures and their estates should have control over their likenesses. “For public figures who are recently deceased, authorized representatives or owners of their estate can request that their likeness not be used in Sora cameos,” the spokesperson stated.

Chelsea’s TikTok video has attracted significant attention, amassing over 41,000 likes and more than 700 comments. Users have voiced concern about the growing prevalence of AI scams. One commenter noted, “Eventually, we will not be able to differentiate what’s real and what’s not real.” Another added, “As someone working in banking, protect yourself and your loved ones.” A third user called for better education, stating, “We need more data literacy education to be able to distinguish from real and AI-generated content.”

As AI technology continues to advance, the call for heightened awareness and protective measures becomes increasingly urgent. Families are encouraged to discuss and implement safe words to safeguard against the potential threats posed by deepfake scams.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health3 months ago

Health3 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

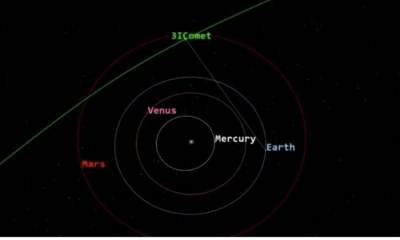

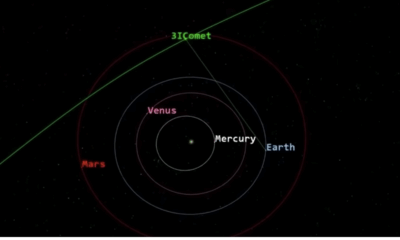

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science1 month ago

Science1 month agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science1 month ago

Science1 month agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment4 months ago

Entertainment4 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

Science1 month ago

Science1 month agoNASA Scientists Explore Origins of 3I/ATLAS, a Fast-Moving Visitor

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment3 months ago

Entertainment3 months agoMajor Cast Changes at Coronation Street: Exits and Returns in 2025