NYU Unveils AI-Powered Malware, PromptLock, Raising Cybersecurity Alarms

Researchers at New York University (NYU) have developed a prototype malware known as “PromptLock,” designed to probe security vulnerabilities associated with artificial intelligence (AI) in cybersecurity. This malware, discovered by ESET on VirusTotal, is not intended for malicious use but serves as a controlled academic experiment conducted by NYU’s Tandon School of Engineering. The initiative aims to explore the implications of AI-driven ransomware, highlighting the critical balance between the advancements in AI technology and the pressing need for enhanced digital security measures.

PromptLock’s introduction has stimulated discussions among cybersecurity experts and policymakers, particularly as concerns about AI misuse continue to grow. In recent weeks, the media has reported on similar issues surrounding large language models (LLMs) and their potential for exploitation by cybercriminals. Previous demonstrations have shown how AI can facilitate simpler hacking techniques; however, PromptLock distinguishes itself by its capability to autonomously strategize and execute ransomware tasks. This places the software in a unique position within the evolving landscape of cybersecurity threats.

Understanding PromptLock’s Development and Capabilities

PromptLock was created by NYU researchers who sought to illustrate the potential dangers of AI-based threats. Led by Professor Ramesh Karri, the team received support from agencies including the Department of Energy and the National Science Foundation. Using open-source tools and commodity hardware, they aimed to build a practical example of how future threats could manifest. As the project’s lead author, Md Raz, explained, the design demonstrates how LLMs can script and automate attacks with minimal human involvement.

PromptLock employs an open-weight language model released by OpenAI, with natural language prompts embedded in its binary. This allows the malware to perform complex operations such as system reconnaissance, data exfiltration, and the generation of personalized ransom notes. Because the LLM’s output can vary from one run to the next, each instance may look different, which complicates detection compared with traditional malware.

Implications for Cybersecurity and Future Challenges

The research surrounding PromptLock reveals significant challenges in identifying and countering AI-driven threats. The polymorphic nature and personalization made possible by LLMs pose a formidable obstacle for security professionals. As emphasized by both NYU and ESET, while PromptLock itself is a controlled academic demonstration, its existence underscores the ease with which malicious actors could adapt these techniques for real-world exploitation.

Debates concerning regulatory responses and technical safeguards for LLMs are ongoing, with policy approaches varying widely across different regions and administrations. Although PromptLock is not an operational threat, its academic context sheds light on emerging risks associated with AI misuse. The media coverage following its unveiling has raised awareness within the cybersecurity community, demonstrating the urgency of adapting to these developments.

Recent incidents, such as the use of Anthropic’s Claude LLM in extortion cases, illustrate the need for proactive measures within the security sector. Experts stress the importance of implementing effective defenses against prompt injection and jailbreak attempts, as these vulnerabilities can be exploited by attackers. The sophistication of LLMs makes tailored ransomware campaigns accessible to low-skilled individuals, raising alarms about the future of cybersecurity.

As organizations and security professionals navigate these evolving threats, understanding the mechanics behind AI-assisted malware like PromptLock becomes crucial. The lessons learned from this research highlight the importance of collaboration between academia and industry to anticipate and address emerging risks. Both AI developers and security defenders must remain vigilant, recognizing the rapid evolution of attack models and the pressing need for robust cybersecurity strategies in an increasingly digital world.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health3 months ago

Health3 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

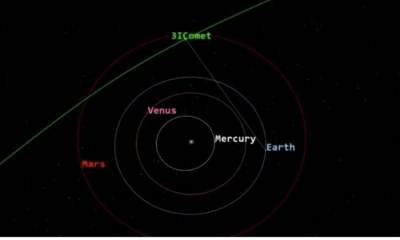

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science1 month ago

Science1 month agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science1 month ago

Science1 month agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment4 months ago

Entertainment4 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

Science1 month ago

Science1 month agoNASA Scientists Explore Origins of 3I/ATLAS, a Fast-Moving Visitor

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment3 months ago

Entertainment3 months agoMajor Cast Changes at Coronation Street: Exits and Returns in 2025