Understanding AI Detectors: How They Work and Their Reliability

The rise of generative AI has transformed how written content is produced, with chatbots like ChatGPT, Gemini, and Grok generating text within seconds. This capability has raised concerns among educators that students may misuse these tools to take shortcuts on their assignments. In response, AI content detectors have emerged, claiming to reliably distinguish between human-written and AI-generated text.

These detection tools use probabilistic language models to score text on metrics such as perplexity and burstiness. Perplexity measures how predictable a passage is to a language model: the lower the perplexity, the more predictable the word choices. Burstiness refers to how much sentence length and structure vary across a passage. AI-generated text tends to show low perplexity and fairly uniform sentences, and detectors treat that combination as a warning sign. Nonetheless, as AI chatbots evolve, accurately flagging AI content has become increasingly difficult.
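To make the two metrics concrete, here is a minimal Python sketch. It assumes the per-token probabilities have already been produced by some language model (the values used below are invented for illustration), and it uses the coefficient of variation of sentence lengths as one simple, hypothetical proxy for burstiness. Commercial detectors do not publish their exact formulas, so this is not how any particular tool computes its score.

```python
import math
import re
import statistics


def perplexity(token_probs: list[float]) -> float:
    """Perplexity from per-token probabilities p(w_i | w_<i).

    Computed as exp of the average negative log-probability.
    Lower values mean the model found the text more predictable.
    Assumes the probabilities come from some language model.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text: str) -> float:
    """Hypothetical burstiness proxy: coefficient of variation of sentence lengths.

    Returns 0.0 when every sentence has the same number of words
    (or when there are fewer than two sentences).
    """
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Invented probabilities: every token given a 50% chance gives perplexity 2.
print(round(perplexity([0.5, 0.5, 0.5, 0.5]), 2))  # 2.0
# Two equally long sentences: no variation at all.
print(burstiness("One two three. Four five six."))  # 0.0
# One very short and one long sentence: high variation.
print(round(burstiness("Short. This sentence is quite a bit longer than the first one."), 2))  # 0.83
```

In this toy setup, a passage that scores low on both functions would look "AI-like" to a detector, which is also why a very predictable human text can be misjudged.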

Challenges in Detecting AI-Generated Text

The sophistication of generative AI models means they can produce text that is coherent and stylistically polished, often mimicking human writing patterns. The reverse problem also occurs: detectors can mistake genuine human writing for AI output. When Abraham Lincoln’s Gettysburg Address, written long before AI existed, was tested against three popular AI detection tools, QuillBot and Copyleaks AI correctly identified it as human-written, but ZeroGPT classified it as 96.4% AI-generated. This discrepancy highlights a significant issue: the risk of false positives in AI detection.

Users on online platforms such as Reddit have reported similar false positives, suggesting that AI detectors should not be relied on alone. Educators and other users are encouraged to supplement these tools with a manual review of the text. Possible signs of AI-generated content include overly formal language, unnatural sentence structures, and vague phrasing such as “It is commonly believed…” or “Some might argue…”
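The phrase-level cues mentioned here can be turned into a simple highlighter that points a reviewer at sentences worth a second look. This is a hypothetical sketch: the phrase list contains only the two stock phrases quoted in this article, and a match is a prompt for manual review, not evidence that the text was AI-generated.

```python
import re

# Hypothetical watch list: only the two stock phrases quoted in the article.
STOCK_PHRASES: tuple[str, ...] = (
    "it is commonly believed",
    "some might argue",
)


def flag_sentences(text: str, phrases: tuple[str, ...] = STOCK_PHRASES) -> list[str]:
    """Return the sentences that contain any of the given stock phrases.

    Matching is case-insensitive. A hit only marks a sentence for a human
    to re-read; it says nothing definitive about who wrote it.
    """
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if any(p in s.lower() for p in phrases)]


sample = (
    "It is commonly believed that homework improves grades. "
    "My own data from last term says otherwise. "
    "Some might argue the sample was small."
)
for sentence in flag_sentences(sample):
    print("Review:", sentence)
```

Keeping the list short and explicit makes it easy for a reviewer to see why a sentence was flagged and to add or remove phrases as needed.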

Best Practices for Educators and Users

For educators concerned about the integrity of academic work, examining a document’s revision history can provide additional evidence. Sudden, unexplained jumps in word count between revisions might indicate that text was pasted in from an AI tool. Understanding the limitations of AI detectors is equally important: they can provide a useful starting point, but they should not be treated as infallible.
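A rough version of the revision-history check can be automated. The sketch below assumes you have already exported the word count of each saved revision as a list (how to obtain that depends on the editor and is not shown), and it flags any revision that adds more than a hypothetical threshold of 300 words at once. The threshold is illustrative, not a recommended value, and a flagged jump is only a reason to ask the student about that revision.

```python
def flag_jumps(word_counts: list[int], max_added: int = 300) -> list[int]:
    """Return indices of revisions that added more than `max_added` words.

    `word_counts[i]` is the document's word count at revision i
    (assumed input). `max_added` is a hypothetical threshold.
    """
    return [
        i
        for i in range(1, len(word_counts))
        if word_counts[i] - word_counts[i - 1] > max_added
    ]


# Invented history: steady growth, then about 900 words appear in one save.
history = [0, 120, 260, 410, 1310, 1380]
print(flag_jumps(history))  # [4]
```

Comparing consecutive revisions, rather than looking at the final length, is what surfaces a single large paste among otherwise gradual edits.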

As AI continues to advance, the debate surrounding its impact on education and content creation will likely intensify. The challenge remains to balance the benefits of AI tools with the necessity of maintaining academic integrity. In navigating this landscape, both educators and students must remain vigilant and informed about the evolving capabilities of generative AI and the tools designed to detect its output.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health3 months ago

Health3 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

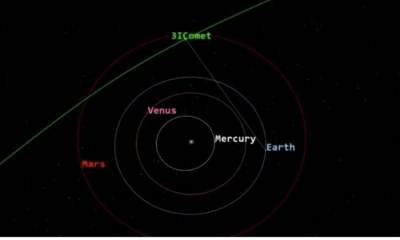

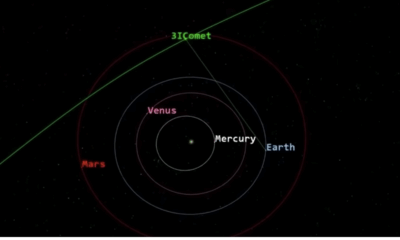

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science1 month ago

Science1 month agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science1 month ago

Science1 month agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment4 months ago

Entertainment4 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

Science1 month ago

Science1 month agoNASA Scientists Explore Origins of 3I/ATLAS, a Fast-Moving Visitor

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment3 months ago

Entertainment3 months agoMajor Cast Changes at Coronation Street: Exits and Returns in 2025