Edinburgh Researchers Unveil Tech to Boost AI Speed Tenfold

Researchers at the University of Edinburgh have developed a system that could speed up artificial intelligence (AI) data processing by a factor of ten. The advance is expected to matter most to industries that rely on large language models (LLMs), such as finance and healthcare, and to customer service applications like chatbots.

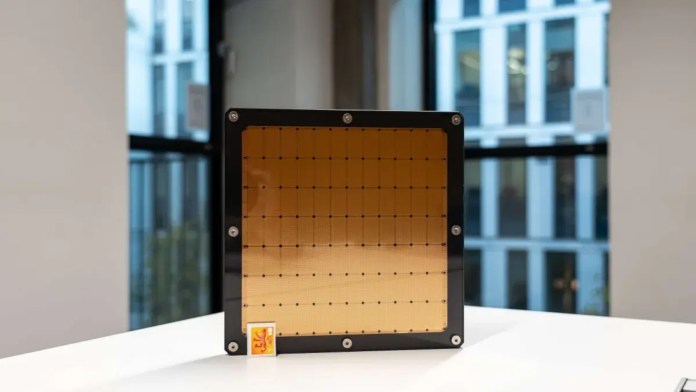

The innovation is rooted in the use of wafer-scale computer chips, which are the largest of their kind and comparable in size to a medium chopping board. Their expansive on-chip memory and design allow these chips to perform many calculations simultaneously. Currently, most AI systems run on graphics processing units (GPUs) connected in networks, so data must travel between different chips. That movement can limit performance and efficiency.

WaferLLM: A New Era for AI Processing

The team at the University of Edinburgh has introduced WaferLLM, a software system designed specifically for wafer-scale chips. In tests conducted at EPCC, a supercomputing centre at the university, third-generation wafer-scale processors delivered a tenfold increase in response speed compared to a cluster of 16 GPUs. The chips were also considerably more energy-efficient, consuming about half the energy of GPUs when running LLMs.

According to Dr. Luo Mai, the lead researcher and reader at the university’s School of Informatics, the potential of wafer-scale computing has been remarkable, yet software limitations have hindered its practical application. He stated, “With WaferLLM, we show that the right software design can unlock that potential, delivering real gains in speed and energy efficiency for large language models.”

The peer-reviewed research was presented at the USENIX Symposium on Operating Systems Design and Implementation (OSDI) in July 2025. Professor Mark Parsons, director of EPCC and dean of research computing at the university, emphasized the significance of the findings, noting, “Dr. Mai’s work is truly ground-breaking and shows how the cost of inference can be massively reduced.”

Future Implications and Open-Source Accessibility

The implications of this research extend beyond immediate performance improvements. The team has made their findings available as open-source software, allowing other developers to create applications that leverage wafer-scale technology. This collaborative approach could foster innovation across various sectors that depend on rapid data analysis and real-time intelligence.

As industries increasingly rely on AI for critical decision-making processes, the advancements made by the University of Edinburgh could pave the way for a new generation of AI infrastructure capable of meeting the demands of modern society. The potential applications in science, healthcare, education, and everyday life are substantial, marking a significant step forward in the field of artificial intelligence.

-

Health3 months ago

Health3 months agoNeurologist Warns Excessive Use of Supplements Can Harm Brain

-

Health4 months ago

Health4 months agoFiona Phillips’ Husband Shares Heartfelt Update on Her Alzheimer’s Journey

-

Science2 months ago

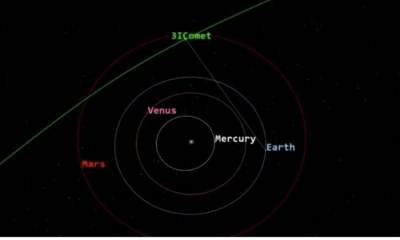

Science2 months agoBrian Cox Addresses Claims of Alien Probe in 3I/ATLAS Discovery

-

Science2 months ago

Science2 months agoNASA Investigates Unusual Comet 3I/ATLAS; New Findings Emerge

-

Science2 months ago

Science2 months agoScientists Examine 3I/ATLAS: Alien Artifact or Cosmic Oddity?

-

Entertainment2 months ago

Entertainment2 months agoLewis Cope Addresses Accusations of Dance Training Advantage

-

Entertainment5 months ago

Entertainment5 months agoKerry Katona Discusses Future Baby Plans and Brian McFadden’s Wedding

-

Science2 months ago

Science2 months agoNASA Investigates Speedy Object 3I/ATLAS, Sparking Speculation

-

Entertainment5 months ago

Entertainment5 months agoEmmerdale Faces Tension as Dylan and April’s Lives Hang in the Balance

-

Entertainment2 days ago

Entertainment2 days agoAndrew Pierce Confirms Departure from ITV’s Good Morning Britain

-

World3 months ago

World3 months agoCole Palmer’s Cryptic Message to Kobbie Mainoo Following Loan Talks

-

World4 weeks ago

World4 weeks agoBailey and Rebecca Announce Heartbreaking Split After MAFS Reunion